Users often put a high premium on an SSD having DRAM. As explained in our related blog, this DRAM is usually used to store metadata, with mapping or addressing information taking up most of the space. It’s possible to use system memory instead, through the NVMe host memory buffer (HMB) feature, although a copy is always kept on the non-volatile NAND as well. For optimal performance, that copy is stored in pSLC mode in a system-reserved area. Latency is key when a lot of addresses have to be looked up, as with small random I/O, but what exactly is mapping?

The original ratio of 1GB of DRAM per 1TB of NAND is derived from the fact that 32-bit (4-byte) addressing is used to map 4KB logical block addresses (LBAs) to physical block addresses (PBAs): one 4-byte entry for every 4KB block comes to 1/1024 of the capacity. This is a type of translation between the logical addresses the file system (FS) sees and the SSD's physical storage, performed by a flash translation layer (FTL). 4KB is a typical I/O size due to physical sector size, and even though current consumer NAND has 16KB physical pages, they can be broken down into 4KB quarter-pages, also called logical pages or subpages. However, drives with little or even no DRAM can still manage, not only because some of the controller's SRAM can act as a metadata cache but also because consumer usage tends not to be DRAM-dependent.
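The arithmetic behind that ratio can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: the function name `mapping_table_bytes` is made up for illustration, binary units (KiB, GiB, TiB) are assumed, and a real FTL stores more than a flat table of 4-byte entries.

```python
KIB = 1024
MIB = 1024 ** 2
GIB = 1024 ** 3
TIB = 1024 ** 4


def mapping_table_bytes(capacity_bytes, logical_page_bytes=4 * KIB, entry_bytes=4):
    """Size of a flat table with one entry per logical page (hypothetical helper)."""
    entries = capacity_bytes // logical_page_bytes
    return entries * entry_bytes


# 1 TiB mapped in 4 KiB units with 4-byte entries: 2^28 entries * 4 bytes
print(mapping_table_bytes(1 * TIB) / GIB)              # 1.0 (GiB)

# The same drive mapped at the full 16 KiB physical page size instead
print(mapping_table_bytes(1 * TIB, 16 * KIB) / MIB)    # 256.0 (MiB)
```

Mapping at a coarser unit shrinks the table proportionally, which is the trade-off the rest of this post keeps coming back to.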

"Minipage" (logical page or subpage) as 4KB out of 16KB physical pages. Source.

A good example is the newest generation of consoles, where Sony in its PlayStation 5 storage patent suggested it could get by with just a bit of SRAM. This was made possible by using larger chunks or block sizes, as will be the case with DirectStorage for PC gaming on Microsoft Windows. Data that is contiguous or coalesced does not need fine-grained addressing; coarse-grained addressing is enough. For example, you could store the starting address plus a value denoting the number of sequential pages, rather than recording every page individually. This and other techniques, such as those available in enterprise with zoned namespaces (ZNS) and key-value (KV) configurations, can reduce the amount of memory required, which is useful even if the SSDs have plenty of on-board memory.
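The "starting address plus a count" idea can be sketched as a run-length (extent) encoding of a page-level table. This is an illustrative toy, not how any particular controller or the PlayStation 5 design works; `build_extents` and `lookup` are hypothetical names, and the table is assumed to be a simple list indexed by logical page number.

```python
import bisect


def build_extents(l2p):
    """Collapse a page-level table into (first_lpn, first_ppn, length) runs."""
    extents = []
    for lpn, ppn in enumerate(l2p):
        if extents:
            start_lpn, start_ppn, length = extents[-1]
            # Logical pages are visited in order, so only physical
            # contiguity needs checking to extend the current run.
            if ppn == start_ppn + length:
                extents[-1] = (start_lpn, start_ppn, length + 1)
                continue
        extents.append((lpn, ppn, 1))
    return extents


def lookup(extents, lpn):
    """Translate a logical page number using the extent list."""
    starts = [e[0] for e in extents]
    i = bisect.bisect_right(starts, lpn) - 1
    if i < 0:
        raise KeyError(lpn)
    start_lpn, start_ppn, length = extents[i]
    if lpn >= start_lpn + length:
        raise KeyError(lpn)
    return start_ppn + (lpn - start_lpn)


# Seven logical pages: two sequential runs and one stray page
l2p = [100, 101, 102, 103, 500, 501, 42]
extents = build_extents(l2p)
print(extents)             # [(0, 100, 4), (4, 500, 2), (6, 42, 1)]
print(lookup(extents, 5))  # 501
```

Seven entries shrink to three here. The saving grows with how sequential the data is, and disappears for fully random placement, which is why this suits large game assets better than small random writes.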

"...coarse granularity level such as 128 MiB." Source ([0062]).

However, as suggested in our wear leveling blog, modern SSDs may also need to track information at the block level. While flash is read and written in pages, it is erased in blocks. Maintaining even wear across these blocks requires data about the blocks, including their actual position within a die; flash is non-uniform due to production constraints and this can impact effective wear. Therefore, a type of hybrid mapping is used, tracking data at both the page and block level. Memory usage can be reduced through compression and metadata techniques, with algorithms balancing how much of each kind of data is kept.

Hybrid mapping. Source.

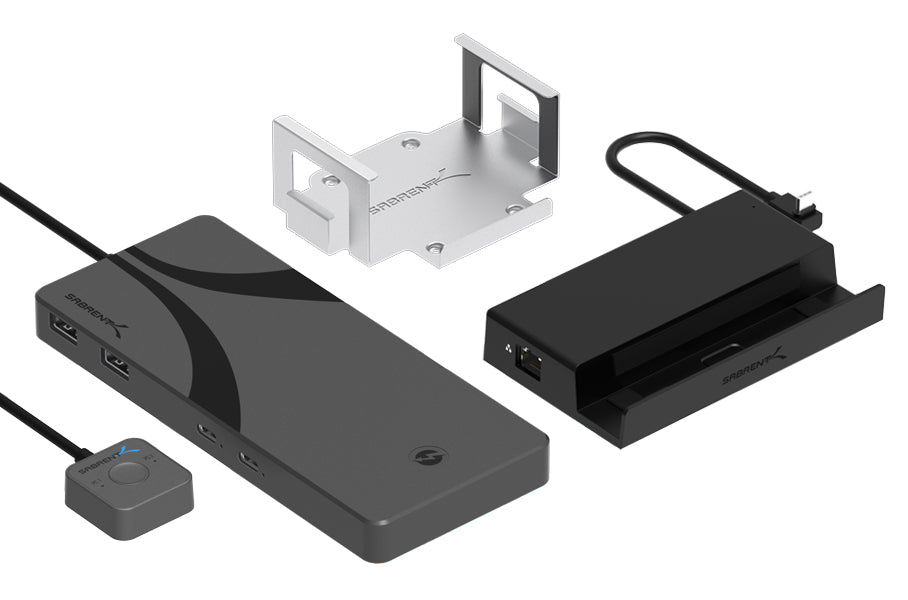
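To make the hybrid idea more concrete, here is a toy model that keeps a page-level table alongside per-block bookkeeping. It is a sketch under heavy assumptions: the class names `BlockInfo` and `HybridMap`, the four-page blocks, and the "pick the least-erased block" policy are all invented for illustration, and garbage collection, die position, and compression are not modelled.

```python
from dataclasses import dataclass

PAGES_PER_BLOCK = 4  # unrealistically small, for illustration only


@dataclass
class BlockInfo:
    erase_count: int = 0   # block-level wear data
    valid_pages: int = 0   # how many pages still hold live data
    next_free: int = 0     # next unwritten page offset in the block


class HybridMap:
    def __init__(self, num_blocks):
        self.blocks = [BlockInfo() for _ in range(num_blocks)]
        self.l2p = {}  # page-level map: logical page -> (block, page)
        self.open_block = self._pick_block()

    def _pick_block(self):
        # Among blocks with free pages, prefer the least-worn one.
        free = [i for i, b in enumerate(self.blocks)
                if b.next_free < PAGES_PER_BLOCK]
        if not free:
            raise RuntimeError("no free pages (garbage collection not modelled)")
        return min(free, key=lambda i: self.blocks[i].erase_count)

    def write(self, lpn):
        if self.blocks[self.open_block].next_free == PAGES_PER_BLOCK:
            self.open_block = self._pick_block()
        old = self.l2p.get(lpn)
        if old is not None:
            # Flash cannot overwrite in place: the old copy just becomes stale.
            self.blocks[old[0]].valid_pages -= 1
        blk = self.blocks[self.open_block]
        loc = (self.open_block, blk.next_free)
        blk.next_free += 1
        blk.valid_pages += 1
        self.l2p[lpn] = loc
        return loc

    def erase(self, block):
        blk = self.blocks[block]
        if blk.valid_pages:
            raise ValueError("block still holds valid pages")
        blk.erase_count += 1
        blk.next_free = 0


ftl = HybridMap(num_blocks=3)
for _ in range(2):            # write logical pages 0-3, then overwrite them
    for lpn in range(4):
        ftl.write(lpn)
ftl.erase(0)                  # block 0 now holds only stale data
print(ftl.write(9))           # (2, 0): the never-erased block 2 is chosen over block 0
```

The page-level dictionary answers "where is this data?", while the per-block records answer "which block should be written or erased next?", and both cost memory.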

The NVMe SSDs that we sell are largely equipped with DRAM and effective mapping to keep access latencies low. If you need to do some content creation or database work, or maybe have to spin up a few virtual machines, these drives can manage excellently. Our DRAM-less drives can utilize HMB in a pinch, as well. In all cases we make sure your data is reliably accessible. We also want our SSDs ready for the future, tailoring firmware to get the most out of your storage for gaming and for the OS. Our focus is on having the fastest, largest SSDs you can buy.

Stay tuned for part two of this discussion with some further detail.